Newsletter Title: Forever COBOL

A seemingly dead programming language from the middle of the previous century is being hyper-charged by the latest and most advanced LLMs.

By all measures, COBOL, the common business-oriented language that originated in the late 1950s, was a pretty big success. You probably haven’t heard of it. Those of us born about the same time as this latent computing language were exposed to it in the late 70s and 80s, and especially on college campuses where mainframes had gotten their first footings in research roles.

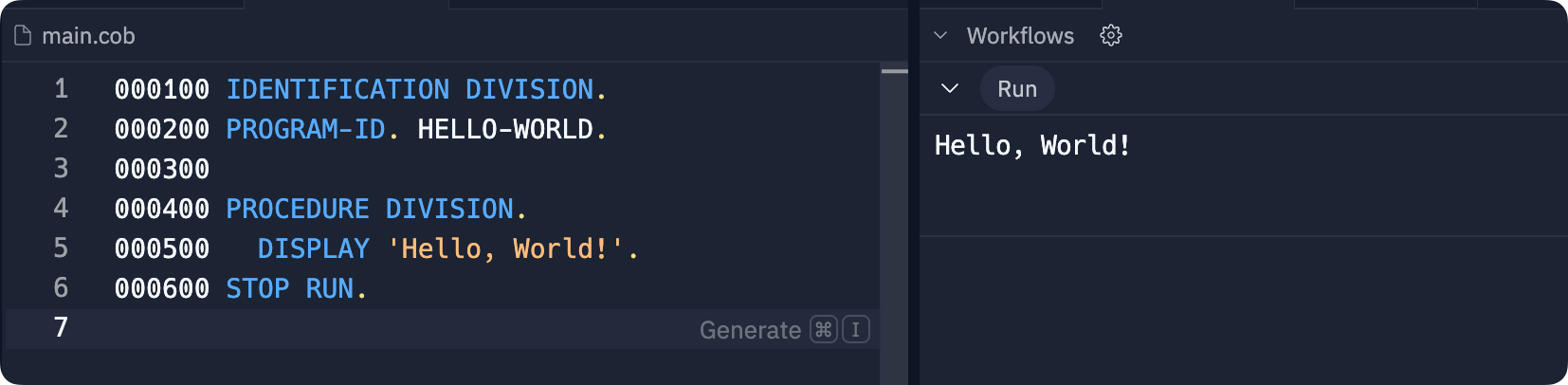

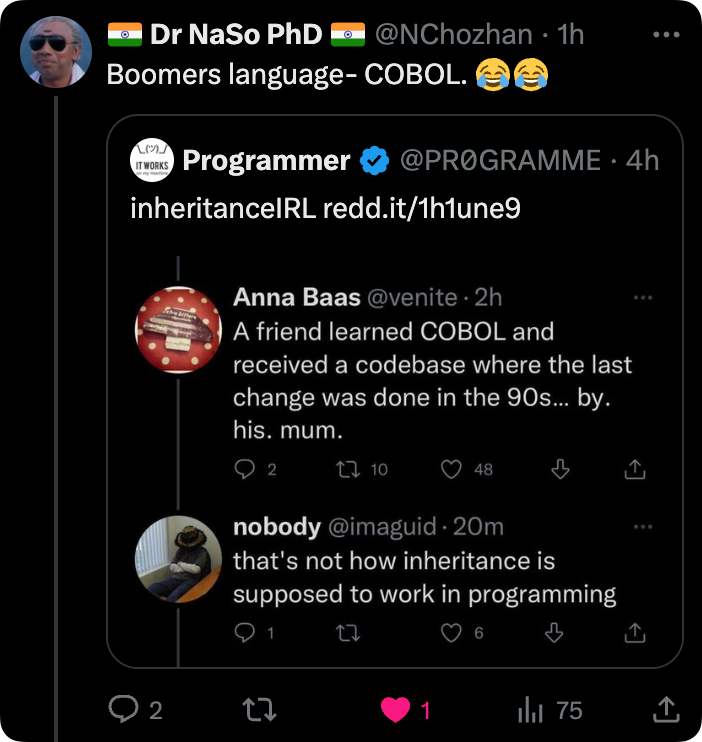

Setting aside the few remaining COBOL consultants and analysts, anyone who is vaguely aware of COBOL mostly believes it’s a dead language. It seems dead enough. It's often the subject of jokes, and you don’t, as a rule, hear Gen Z’ers casually dropping its name around Starbucks as they plot new startup strategies. Yet it continues to power many legacy systems in industries like finance, banking operations, and government. As dead as this living and breathing dinosaur may be, you can get a free account in Replit and build your first COBOL app in about 60 seconds.

In the 80s I never gave the all-uppercase command format a second thought. Today, I feel like this language is always shouting. In a way, it has every right to shout. It’s generally faster than C and C++. It blows past Java applications, and it’ll make JavaScript seem like it could be rear-ended by a glacier when performing data-frame sorts¹.

Strangely, multiple generations of engineers have worked with COBOL. For some, it’s a family thing. The irony of “inheriting” a COBOL system from your mom doesn’t escape me.

If you swiped a credit card at an ATM recently, there’s a 95% chance your transaction was processed with COBOL. Let that sink in. Interestingly, the ATM is roughly the same age as COBOL; both remain highly relevant to us despite one being highly visible and the other primarily invisible.

About $20B in cash flows out of the mouths of ATMs in America every year, almost all of which is made possible with COBOL. Banks are deeply dependent on COBOL for several other critical processing systems. Airlines use COBOL. Sabre was the first global distribution system (GDS), and it was written in COBOL. Many of the most successful IT systems emerged because of COBOL.
Some have transitioned to modern languages, but most have not.² In 2024, an estimated 70% to 80% of the world's business transactions will be processed using COBOL, highlighting its significant role in systems that millions depend on daily. The digital economy relies heavily on COBOL's resilience, sustained performance, and efficiency, especially in online transactions, banking operations, and public sector databases. Companies that keep COBOL-based systems working say that 95 percent of ATM transactions pass through COBOL programs, 80 percent of in-person transactions rely on them, and over 40 percent of banks still use COBOL as the foundation of their systems. To the surprise of many industry experts, the COBOL code base is estimated to be three times bigger than first thought: approximately 850 billion lines and growing, even as the pool of workers skilled in the language dwindles.

Converting COBOL systems to Java systems can be challenging due to the significant differences in language structure, programming paradigms (procedural vs. object-oriented), and data handling. These differences can lead to complex migration processes, potential performance issues, and the need for extensive testing. Also, finding developers skilled in both COBOL and Java can be extremely difficult. As a result, a direct conversion may produce a system informally referred to as "JOBOL," which retains many COBOL-like characteristics within the Java framework, complicating maintenance and constraining agility. One Hacker News contributor summed it up like this:

COBOL's original promise was that, given its human-like text, we wouldn't need programmers. Like "low code" platforms and now LLM-generated code, COBOL would be the game changer that reduced dependencies on programmers and possibly eliminated them. They were wrong then, and the no/low-code era has assured us that we need to write code. Generative AI is also on a trajectory that, in all likelihood, will prove this yet again. Programmers are here for a while longer, but their longevity will be increasingly gated by their ability to convey requirements with written or verbal precision. As I wrote here…

The more I examine the work of software developers, the more I realize engineers are word-averse. For many engineers, English is their second language. They enjoy speaking the language of science and math through tokenized instruction for computers. They focus on control, and they know from experience that good software is a function of unambiguous instructions. But when it comes to writing requirements, forget about it. GPT prompts are typically not in the software engineers’ wheelhouse. Roughly 66%³ of enterprise IT projects end in partial or total failure. Requirements management is at the root of this extremely high failure rate. The problem is that the average person doesn't know how to explain and solve a problem in sufficient detail to compel engineering teams to create solid working solutions. And that, by definition, is what a programmer is supposed to do.

There are many aspects to consider if something like Watsonx®⁴ is adopted as a strategy for such a big transition. Watsonx® typically encounters several challenges during the translation process. The complexity of legacy COBOL code, with its unique syntax and logic, often leads to issues such as missing context, intricate data structures, and the need for significant manual adjustments to ensure the generated Java code functions correctly and meets the business requirements that are so eloquently expressed in COBOL. Additionally, factors like poorly structured COBOL code, a lack of proper documentation, and inherent limitations in the AI model can contribute to these and other hidden challenges.

But the biggest challenge of all is rarely discussed: people. In many organizations, if you wanted to transition to modern tools, you would need to replace more than 50% of your technical staff. At the same time, it is becoming increasingly difficult to find replacements for those who leave and who are deeply familiar with the aging toolset. This situation cannot persist indefinitely.
Until you reach a certain threshold of staff who are comfortable with a modern software development environment, the current stasis may continue for quite some time, possibly forever. The unique demographics of mainframe teams are influenced by the fact that many members entered the tech industry before microcomputers became widespread. These individuals often adhere to traditional methods, as they began their careers at a time when starting out by stacking tapes in a data center or transitioning from accounting was common. This contrasts sharply with the broader tech landscape, which has seen significant growth, particularly since the late 1980s and early 1990s, resulting in a much younger workforce. In many other sectors, programming became popular later, leading to a more diverse and younger group of professionals than in the mainframe environment.

Because the mainframe is the oldest computing platform still in use, its culture is often overlooked and not well understood by those outside this realm. This presents unique challenges that are not primarily related to the more familiar issue of converting COBOL code into equivalent code in other programming languages.

One final point concerning conversion is that COBOL (unlike Java) is extremely efficient, often matching the speed of C and sometimes using memory more effectively for the specific types of business workloads it was originally intended to address. Java's popularity on mainframes stems largely from the licensing advantages that IBM was compelled to grant because of Java's higher resource consumption, as well as the fact that IBM's JVM (originally from Sun Microsystems⁵) is a well-regarded implementation favored by teams outside of mainframe environments. However, if the trend shifts heavily towards Java, those licensing advantages may evaporate. And if they do, the costs will make the conversion pathway far more problematic than the technical mismatch already does.
It’s clear that more investment needs to be made in C/C++ and possibly Go rather than Java, at least in the beginning. It is possible to write portable applications in C/C++ and Go without incurring the significant performance penalties typically associated with transitioning to a Java stack. Some developers even believe Rust is fast enough and type-safe enough to lean on for COBOL transitions. There’s even a simple COBOL-to-Rust converter⁶. Organizations that have tried to transition quickly with the aid of tools like Watsonx® realize they have simply created a second unrecognizable code base.

With the backstory and modern-day reliance on COBOL in mind, let’s turn our attention to the collision of generative AI and COBOL. In my advancing age, this interests me most because I’m curious about how ancient computing languages will blend with contemporary technologies. Artificial intelligence seems like an excellent context in which to examine this; the two sit at opposite ends of the technology spectrum. Yet here they are on the verge of co-mingling, whatever that means. When contemplating ways that generative AI and COBOL might intersect, my first instinct is simply to imagine GitHub Copilot for COBOL⁷: a pair programmer who assists you as you work. The only offering I’m aware of is Phase Change, and they’re not currently shipping COBOL Colleague™, as evidenced by this scrolling banner. I just puked a little in my mouth. I thought I saw my last web banner in 2004. COBOL was created in the 1950s, and the Phase Change website looks like it was made in the 1990s. Setting aside several serious pitch issues, the story is compelling.

This resonates with me.

Days? Really? That seems like a stretch. Let’s be realistic; it’s likely many days at minimum. However, there’s no debate. Knowledge attrition in engineering teams is a real threat to these critical applications' operational maintenance and sustainability.

No argument here. The value proposition of blending generative AI technology with well-trained [COBOL-aware] LLMs is very promising. But at least one devil exists in that detail and that devil is holding aces.

Large language models are pretty good at writing C, Python, and JavaScript. Each of these languages is well represented in the open-source community. COBOL? Well, let’s just say it doesn’t get out and party much with the cool kids. So, there’s not an abundance of code for training LLMs to get really competent with this language. Bloop.ai dives in deep to help us assess how well LLMs can generate COBOL. But is this the question we should be asking? In my view, it doesn’t align well with the business requirements that are so obvious.

You DON’T need LLMs to address these requirements. You DO need LLMs to work with these resources and create information advantages and hyper-productivity for those saddled with the challenges of maintaining and improving COBOL systems. But this is true of most use cases where generative AI is useful. Data is the new oil for immovable legacy systems. And by data, I mean deeply chunkified vectors stored in high-performance databases that transform opaque black boxes of the previous century into extremely transparent views of logic, rules, and functional business processes.

It’s easy to surmise that Phase Change automates the creation of information systems that represent a first-principles model of a COBOL system. By focusing on the business rules that lead to writing output values (the heart of most COBOL batch processing systems), the approach makes business functions better understood, because it gives engineers and managers the minimal slices of code that implement the underlying business rules of the application. Armed with a comprehensive knowledge graph, generative AI is then able to help both technical and non-technical stakeholders understand the system, query it, and more easily formulate maintenance and enhancement strategies. These are the usual and customary advantages of a highly focused datastore. No rocket science needed; just science. Knives are drawn. The team is now able to go far beyond anything previously imagined if, and only if, the datastore is hyper-granular and mapped into a well-designed ontology¹⁰.

A finer point is worth mentioning concerning the use of AI: we shouldn’t ask the LLM to make sense of COBOL code. Generative AI can quickly get off the rails in complex systems, especially where adequate open-source projects do not exist to serve as training resources. Instead, we would be wise to ask it to describe formalized facts about the COBOL solutions, sharply reducing the chance of hallucinations.
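To make the "facts, not raw code" idea concrete, here is a minimal Python sketch. It is not Phase Change's method, which I can only guess at. Everything in it is hypothetical: the `RuleFact` record, the payroll-style paragraph and field names, and the prompt wording. It assumes some upstream analyzer has already extracted one fact per business rule. Given an output field, it walks the dependencies backward to collect the minimal slice of rules that can influence that field, then builds a prompt containing only those facts. No model is actually called.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RuleFact:
    """One formalized fact about a COBOL program (hypothetical shape)."""
    paragraph: str  # COBOL paragraph that implements the rule
    writes: str     # field the rule assigns
    reads: tuple    # fields the rule depends on
    summary: str    # plain statement of the rule


# Hypothetical facts, as an upstream analyzer might have extracted them.
FACTS = [
    RuleFact("CALC-GROSS", "GROSS-PAY", ("HOURS", "RATE"),
             "GROSS-PAY = HOURS * RATE"),
    RuleFact("CALC-TAX", "TAX", ("GROSS-PAY", "TAX-RATE"),
             "TAX = GROSS-PAY * TAX-RATE"),
    RuleFact("CALC-NET", "NET-PAY", ("GROSS-PAY", "TAX"),
             "NET-PAY = GROSS-PAY - TAX"),
    RuleFact("CALC-PTO", "PTO-BALANCE", ("PTO-ACCRUED", "PTO-USED"),
             "PTO-BALANCE = PTO-ACCRUED - PTO-USED"),
]


def slice_for(output_field, facts):
    """Return the facts that can influence output_field, in original order."""
    by_target = {}
    for fact in facts:
        by_target.setdefault(fact.writes, []).append(fact)

    needed, pending, seen = [], [output_field], set()
    while pending:
        field = pending.pop()
        if field in seen:
            continue
        seen.add(field)
        for fact in by_target.get(field, []):
            needed.append(fact)
            pending.extend(fact.reads)  # follow dependencies backward
    return [fact for fact in facts if fact in needed]


def build_prompt(question, output_field, facts):
    """Build a prompt that exposes only the relevant facts to the model."""
    relevant = slice_for(output_field, facts)
    lines = [f"- [{f.paragraph}] {f.summary}" for f in relevant]
    return (
        "Answer using only the facts below. "
        "If they are insufficient, say so.\n"
        "Facts:\n" + "\n".join(lines) + f"\nQuestion: {question}"
    )


if __name__ == "__main__":
    print(build_prompt("How is NET-PAY calculated?", "NET-PAY", FACTS))
```

The point of the design is that the model never sees COBOL. It sees three short, verified statements about NET-PAY and nothing about PTO, so there is far less room for it to invent logic that isn't there.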
In that sense, Phase Change is essentially grounding the LLM with lots of contextual data. Perhaps multi-shot prompting, or fine-tuning, is what they’re doing. The better the data, the better the inferences about questions such as:

Or…

Freakonomics has a slogan: "the hidden side of everything". This resonates with me across many observations in life, business, and technology. Corruption? Follow the money. Enlightenment? Follow the data. But the freaks don't just craft a spreadsheet and grab a podcast mic. They dig until they uncover the hidden side. Not every excavation yields a noteworthy story. Some are dead ends. But many hold deep insights that change behavior when fully understood.

Irrespective of which strategy makes sense, convert or maintain, large COBOL systems must be deeply understood in the context of their current production environments. Full stop. Enterprises that own these systems are facing increasing risks as their skilled workforce declines. They need a strategic approach that assumes COBOL is forever, but which can also aid them if they ultimately transition to modern languages.

In 1975, no one working in the COBOL industry had Peak COBOL / 2025 on their BINGO card. Fifty years¹² is a good run, but 2025 is likely COBOL’s half-life. In 2075 there’s a non-zero probability COBOL will still be a thing. Here’s why…

Forever COBOL. Get used to it. It will still be running long after you die.

1. COBOL is not faster by all measures, but when it comes to the things that COBOL is mostly used for, it wins hands-down against almost every scripting language.

2. And by many, I mean almost 850 billion lines of production code. The legacy investment and the cost of transitioning to new systems are substantial regarding financial outlay and human capital. COBOL systems are stable, and the risk associated with migrating to new, unproven systems can be a deterrent, making sustained reliance on COBOL systems a more favorable option.

3. According to the Standish Group’s Annual CHAOS 2020 report, 66% of technology projects (based on the analysis of 50,000 projects globally) end in partial or total failure.

4. IBM’s bid to use generative AI to convert COBOL to Java.

5. IBM's JVM (Java Virtual Machine) originated from Sun Microsystems, which was the company that initially developed the Java programming language, meaning the core technology behind the JVM was created by Sun; however, IBM became a significant contributor to the Java ecosystem by developing their own high-performance JVM implementation called J9, and actively participated in shaping the Java standards and open-sourcing efforts, even sometimes pushing back against Sun's perceived control over the platform.

6. Quaint and interesting, but not likely very useful.

7. Unfortunately, for a wanna-be COBOL developer, it doesn’t exist.

8. Surprise! It’s a knowledge management challenge, not an AI or LLM challenge.

9. “Bob’s Your Uncle” is an expression that means something is easy to do, or that something is complete or taken care of: "Just complete the form, pay the fee, and Bob's your uncle!" The phrase is commonly used in the United Kingdom and Commonwealth countries. It's similar to the French expression "et voilà!"

10. Ontologies present proper connectors, and oddly, this is similar to the way I manage 30+ years of email.

11. They obviously include defects, new feature requests, and new requirements in the knowledge graph.

12. It’s 60 years.

© 2024 Bill French