My recent work on a large agent development project has led me into some deep, dark tunnels of uncertainty. Much of this work ventures into the unknown: it's basic research, hopefully followed by development. While many of my team's experiences and outcomes have been dead ends, a few things have become foundational knowledge that may prove valuable in the long run. How long that view will hold is, fittingly, uncertain.

I never doubted that generative AI would eventually move toward WebSockets: traditional request-response APIs are too slow for solutions that must seem as brilliant as they are fast.

Tula Masterman inspired this post with the article "AI Agents: The Intersection of Tool Calling and Reasoning in Generative AI," which distinguishes two kinds of agent reasoning:

Reasoning through evaluation and planning relates to an agent’s ability to effectively break down a problem by iteratively planning, assessing progress, and adjusting its approach until the task is completed. Techniques like Chain-of-Thought (CoT), ReAct, and Prompt Decomposition are all patterns designed to improve the model’s ability to reason strategically by breaking down tasks to solve them correctly. This type of reasoning is more macro-level, ensuring the task is completed correctly by working iteratively and taking into account the results from each stage.

Reasoning through tool use relates to the agent’s ability to effectively interact with its environment, deciding which tools to call and how to structure each call. These tools enable the agent to retrieve data, execute code, call APIs, and more. The strength of this type of reasoning lies in the proper execution of tool calls rather than in reflecting on the results of the call.
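Tool-use reasoning comes down to choosing the right tool and producing well-formed arguments for it. OpenAI's Chat Completions API, for example, describes each tool with a name and a JSON Schema `parameters` object, and returns the model's chosen arguments as a JSON-encoded string. The sketch below assumes that call shape; `get_weather`, `TOOL_REGISTRY`, and `dispatch` are hypothetical, and the validation is deliberately minimal (required keys only).

```python
import json
from typing import Any, Callable

def get_weather(city: str, unit: str = "celsius") -> dict[str, Any]:
    """Hypothetical tool: a real one would call a weather service."""
    return {"city": city, "temperature": 21, "unit": unit}

# Hypothetical registry: the schema is what the model sees; the function is what we run.
TOOL_REGISTRY: dict[str, tuple[Callable[..., Any], dict[str, Any]]] = {
    "get_weather": (
        get_weather,
        {
            "type": "object",
            "properties": {
                "city": {"type": "string"},
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]},
            },
            "required": ["city"],
        },
    ),
}

def dispatch(tool_call: dict[str, Any]) -> str:
    """Execute one model-issued tool call and return a JSON string for the model."""
    name = tool_call["function"]["name"]
    if name not in TOOL_REGISTRY:
        return json.dumps({"error": f"unknown tool {name!r}"})
    func, schema = TOOL_REGISTRY[name]
    try:
        args = json.loads(tool_call["function"]["arguments"])  # arguments arrive as a JSON string
    except json.JSONDecodeError as exc:
        return json.dumps({"error": f"malformed arguments: {exc}"})
    missing = [key for key in schema["required"] if key not in args]
    if missing:
        return json.dumps({"error": f"missing required arguments: {missing}"})
    return json.dumps(func(**args))

call = {"id": "call_1", "type": "function",
        "function": {"name": "get_weather", "arguments": '{"city": "Oslo"}'}}
print(dispatch(call))  # prints {"city": "Oslo", "temperature": 21, "unit": "celsius"}
```

Returning errors as data instead of raising lets the model see what went wrong and retry with a corrected call.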

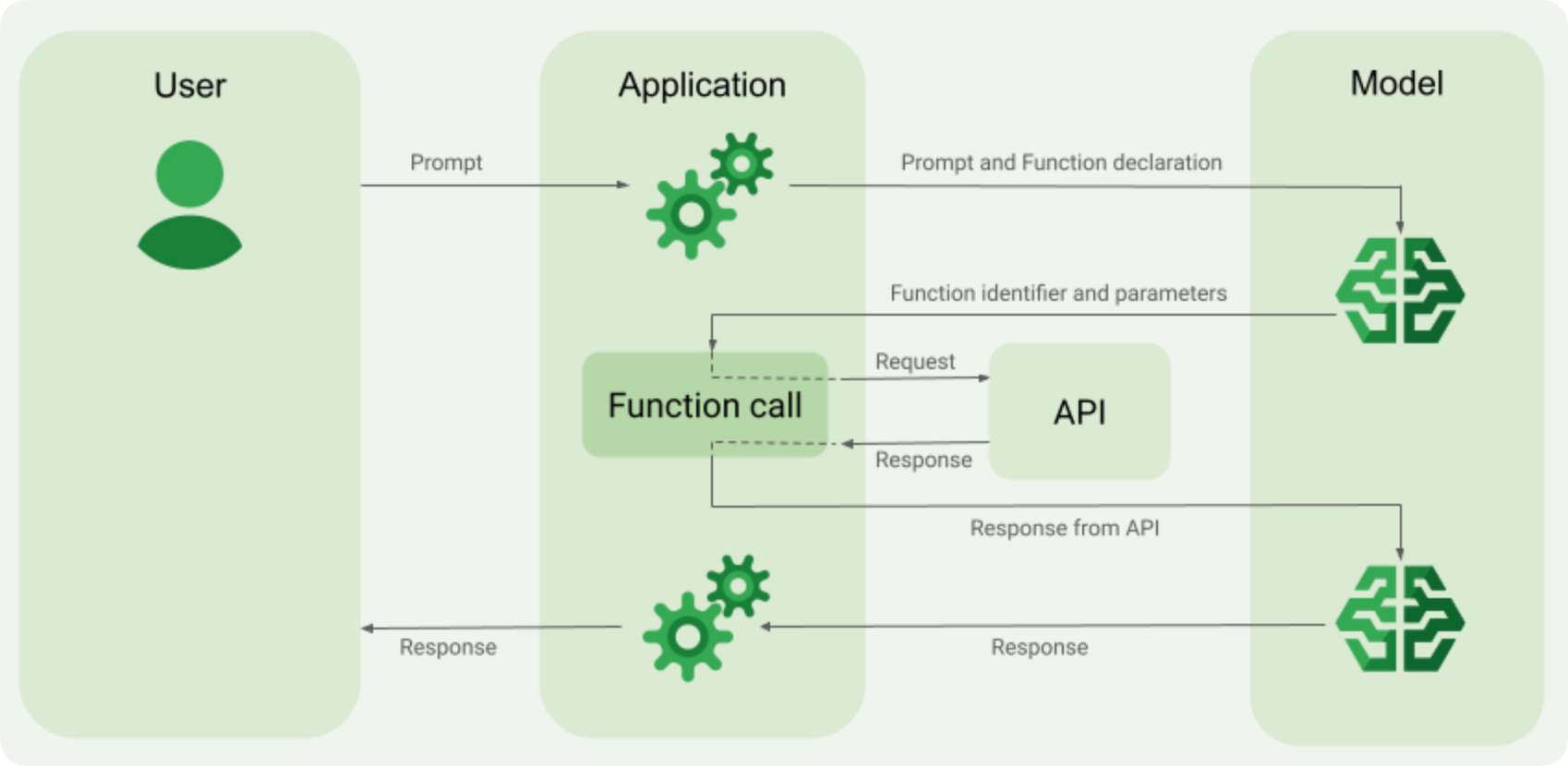

Google does a pretty good job explaining the overall architecture of tool-calling.

Here are some other undeniable learnings I’ve discovered that I consider bankable.

Chatting with LLMs will wane; audio (spoken words) will emerge as the primary conduit, and it won't always be humans doing the speaking. I've always said that we can't chat our way to hyper-productivity. The age of real-time, voice-driven AI solutions has arrived, and the evidence is piling up. OpenAI's new Realtime API (beta), Deepgram's Voice API (beta), and AssemblyAI's real-time platform for building agents are indicators that chatting will soon be a distant memory for many users.

Tool-calling is far more powerful than I ever gave it credit for. I was not thrilled when OpenAI first announced function calling for GPT-3.5 Turbo and GPT-4. I regarded it as a card trick, likely roadkill around the next AI bend. Surprisingly, it stuck around for more than an AI minute. I was wrong because I didn’t anticipate the reasoning skills LLMs would gain. Asynchronous [parallel] tool-calling is especially compelling because it compresses latency: when a model requests several independent tool calls in one turn, they can run concurrently, so the wait is roughly that of the slowest call rather than the sum of all of them. That matters most in real-time voice applications.
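A toy benchmark makes the latency argument concrete. This sketch assumes three independent tool calls requested in a single model turn, each simulated with `asyncio.sleep`; the tool names and delays are made up. Awaited one after another, the delays add up; scheduled together with `asyncio.gather`, the total is roughly the slowest single call.

```python
import asyncio
import time

async def fake_tool(name: str, delay: float) -> str:
    """Hypothetical tool standing in for a network-bound API call."""
    await asyncio.sleep(delay)
    return f"{name} done"

# Three independent calls the model requested in one turn (made-up names and delays).
CALLS = [("lookup_account", 0.3), ("check_inventory", 0.2), ("get_shipping_quote", 0.25)]

async def run_sequential() -> list[str]:
    # Each call waits for the previous one to finish.
    return [await fake_tool(name, delay) for name, delay in CALLS]

async def run_parallel() -> list[str]:
    # gather runs all calls concurrently and returns results in request order.
    return await asyncio.gather(*(fake_tool(name, delay) for name, delay in CALLS))

def timed(coro_fn) -> tuple[list[str], float]:
    start = time.perf_counter()
    results = asyncio.run(coro_fn())
    return results, time.perf_counter() - start

if __name__ == "__main__":
    _, seq_time = timed(run_sequential)
    _, par_time = timed(run_parallel)
    print(f"sequential: {seq_time:.2f}s, parallel: {par_time:.2f}s")
```

On an idle machine, expect the sequential run to take a little over 0.75 s and the parallel run a little over 0.3 s. The gain applies only when the calls are independent; if one call needs another's output, it has to wait.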

If it isn't already, LangChain will soon be regarded as a primitive and weak attempt to coerce LLMs to think before they speak. Now that many models support function calling natively, it is unnecessary to use libraries that add complexity to what is quickly becoming a simple architecture. In fact, “agentic” libraries may create more problems than they solve as the purveyors of real-time voice APIs running on WebSockets compress latency and reduce application complexity.
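To show how little scaffolding function calling needs, here's a framework-free turn loop. The message shapes loosely follow OpenAI's Chat Completions format (an assistant message carrying `tool_calls`, answered by `"role": "tool"` messages keyed by `tool_call_id`), but `fake_model` is a hypothetical stub so the sketch runs offline; in a real application it would be replaced by an API call, and real responses carry more fields than shown. `lookup_order` and `run_turn` are likewise hypothetical.

```python
import json
from typing import Any

def lookup_order(order_id: str) -> dict[str, Any]:
    """Hypothetical tool: a real one would query an order system."""
    return {"order_id": order_id, "status": "shipped"}

TOOLS = {"lookup_order": lookup_order}

def fake_model(messages: list[dict[str, Any]]) -> dict[str, Any]:
    """Hypothetical stand-in for a chat-completions call."""
    if messages[-1]["role"] == "user":
        # First turn: the model decides it needs a tool.
        return {
            "role": "assistant",
            "content": None,
            "tool_calls": [{
                "id": "call_1",
                "type": "function",
                "function": {"name": "lookup_order", "arguments": json.dumps({"order_id": "A17"})},
            }],
        }
    # After a tool result arrives, the model answers in plain language.
    result = json.loads(messages[-1]["content"])
    return {"role": "assistant", "content": f"Order {result['order_id']} has {result['status']}."}

def run_turn(user_text: str, model=fake_model, max_rounds: int = 5) -> str:
    messages: list[dict[str, Any]] = [{"role": "user", "content": user_text}]
    for _ in range(max_rounds):
        reply = model(messages)
        messages.append(reply)
        if not reply.get("tool_calls"):
            return reply["content"]          # no tools requested: this is the answer
        for call in reply["tool_calls"]:     # run each tool and hand its data back to the model
            fn = TOOLS[call["function"]["name"]]
            args = json.loads(call["function"]["arguments"])
            messages.append({"role": "tool", "tool_call_id": call["id"],
                             "content": json.dumps(fn(**args))})
    raise RuntimeError("model kept requesting tools")

print(run_turn("Where is order A17?"))  # prints "Order A17 has shipped."
```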

Achieving agentic precision and determinism isn't as tricky [architecturally] as you may have been led to believe. Creating an agent is almost effortless through prompting. Calling functions based on conversational conditions and returning data to an agent is equally simple. Writing tool functions that perform deterministic computations, data lookups, or database queries (aka software) draws on a deep well of experience, existing business logic, and engineering skill.
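On the tool side, the tool really is just software. Here's a minimal sketch of a deterministic lookup tool backed by an in-memory SQLite database; the `products` table, its rows, and the `make_db` and `get_product_price` names are hypothetical. Given the same inputs it always returns the same output, so precision lives in ordinary, testable code, and the model only decides when to call it.

```python
import json
import sqlite3

def make_db() -> sqlite3.Connection:
    """Build a hypothetical product table in memory."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE products (sku TEXT PRIMARY KEY, name TEXT, price_cents INTEGER)")
    conn.executemany(
        "INSERT INTO products VALUES (?, ?, ?)",
        [("SKU-1", "Headset", 7999), ("SKU-2", "Microphone", 12950)],
    )
    return conn

def get_product_price(conn: sqlite3.Connection, sku: str, quantity: int = 1) -> str:
    """Deterministic tool: parameterized query plus integer arithmetic, returned as JSON."""
    if quantity < 1:
        return json.dumps({"error": "quantity must be at least 1"})
    row = conn.execute("SELECT name, price_cents FROM products WHERE sku = ?", (sku,)).fetchone()
    if row is None:
        return json.dumps({"error": f"no product with sku {sku!r}"})
    name, price_cents = row
    total_cents = price_cents * quantity  # integer cents avoid floating-point rounding
    total = f"{total_cents // 100}.{total_cents % 100:02d}"
    return json.dumps({"sku": sku, "name": name, "quantity": quantity, "total": total})

print(get_product_price(make_db(), "SKU-1", 2))
# prints {"sku": "SKU-1", "name": "Headset", "quantity": 2, "total": "159.98"}
```

Integer cents and parameterized queries are the same habits you'd apply in any other service; nothing about this code is agent-specific.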

"Agentic depth" is also well within reach of anyone who can craft good prompts and write good functions because functions can spin up other asynchronous agents. Coupling this near-effortless chaining of LLM->function->LLM->callback instantly empowers developers with the ability to use an army of agents to solve problems with extreme granularity.

I have many more thoughts on the trajectory of tool-calling, voice, and real-time agents, but I have little time to dive deep into specifics. Please help me write the conclusion of this post by asking me questions I cannot resist answering. ;-)
